Next steps for LLMs

Open source, AutoGPT, Multimodal, and more…

2025-02-01

medium-large language models

With large models come large bills

- Big Tech Struggles to Turn AI Hype Into Profits

- Microsoft reportedly loses an average of 20 dollars per month on every GitHub Copilot user with a 10-dollar subscription.

- Numbers for OpenAI might be similar for ChatGPT+ users.

- Running LLMs is a very costly endeavour
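The reported Copilot figures imply a per-user serving cost. A minimal sketch of that arithmetic, taking the two numbers above at face value (they are reported figures, not audited ones, and the function name is only illustrative):

```python
# Figures as reported on the slide above; treat them as rough estimates.
SUBSCRIPTION_USD = 10    # monthly subscription price per user
REPORTED_LOSS_USD = 20   # reported average monthly loss per user


def implied_monthly_cost(revenue_usd: float, loss_usd: float) -> float:
    """If a user brings in `revenue_usd` and the provider still loses
    `loss_usd`, serving that user must cost revenue + loss."""
    return revenue_usd + loss_usd


cost = implied_monthly_cost(SUBSCRIPTION_USD, REPORTED_LOSS_USD)
print(f"Implied cost per user per month: {cost} dollars")
```

Under these assumptions, serving a user costs about three times what that user pays.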

A case for small large language models

- The since-retracted paper “CodeFusion: A Pre-trained Diffusion Model for Code Generation” may have leaked the size of ChatGPT-3.5-Turbo:

- 20B parameters

- Deemed likely by experts due to model response speed

- Open source models at 13B appear to be somewhat comparable

- OpenAI appears to be shifting towards smaller faster models

A case for small large language models

- Gopher is a 280B-parameter model.

- The road to improvement has become more data, not more parameters.

- Data may now be the bottleneck, even given the incredibly large datasets.

- Most papers are rather vague on their data collection…

A case for small large language models

- Smaller language models:

- Are faster

- Are less expensive

- Wouldn’t require GPU clusters.

- Could lead to more personalised AI in the long run…

- … everybody has their own small-LLM as a personal assistant

A case for small large language models

- The since-retracted paper “CodeFusion: A Pre-trained Diffusion Model for Code Generation” may have leaked the size of ChatGPT-3.5-Turbo:

- 20B parameters is still 40GB of VRAM:

- Most companies don’t have the hardware to run these models

- Consumers especially don’t have the hardware to run this

- You want to get them even smaller

- The open source community already figured out how to do just that.

open source

A case for open source

- Leaked Google memo: “We Have No Moat, And Neither Does OpenAI”

- open source is “faster, more customizable, more private, and pound-for-pound more capable.”

- The researcher claims open source will outpace Google and OpenAI

- “the one clear winner in all of this is Meta”

A case for open source

- Open source developments on LLaMA models as released by Meta:

- LoRA fine-tuning of models on a consumer laptop.

- Quantisation makes LLaMA run at usable tokens/second on a consumer laptop.

- Achieved parity with 5-million-dollar models for 600 dollars
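To see why LoRA fine-tuning fits on a laptop, it helps to count trainable parameters. LoRA freezes a weight matrix of shape (d_out, d_in) and trains two small matrices of rank r in its place, so the trainable count drops from d_out × d_in to r × (d_out + d_in). The sketch below uses an illustrative 4096 × 4096 layer and rank 8; these dimensions are assumptions, not the configuration of any specific model.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when fine-tuning the whole weight matrix."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters with LoRA: B is (d_out x rank), A is (rank x d_in)."""
    return rank * (d_out + d_in)


# Illustrative layer size and rank (assumed, not taken from a real model).
D_OUT, D_IN, RANK = 4096, 4096, 8

full = full_params(D_OUT, D_IN)
lora = lora_params(D_OUT, D_IN, RANK)
print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {RANK}): {lora:,} trainable parameters")
print(f"fraction trained: {lora / full:.2%}")
```

With these numbers LoRA trains well under 1% of the layer's parameters, which is what keeps gradients and optimizer state small enough for consumer hardware.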

Why is open source interesting for you?

- No third party = No need to share private/proprietary data

- Cheaper to use

- Full control to make your own domain specific model (hard)

- You can add your own improvements in the open source ecosystem

Why open source might be less interesting for you

- Requires specialised hardware for best performance

- Not as user-friendly as paid models

- Not SOTA (even though it comes close)

More and more open source models …

See what is trending on huggingface.co/

Most models are released in half-precision (16 bit):

- 3B parameters ~ 6 GB of (V)RAM

- 7B parameters ~ 14 GB of (V)RAM

- 13B parameters ~ 26 GB of (V)RAM

- 20B parameters ~ 40 GB of (V)RAM

- 70B parameters ~ 140 GB of (V)RAM

How to run these huge models?
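The half-precision figures follow from a simple rule: each parameter takes 16 bits, which is 2 bytes. A minimal sketch of that estimate, assuming 1 GB = 10^9 bytes and counting only the weights (activations and the attention cache need extra memory on top); the function name is illustrative:

```python
def weights_gb(params_billion: float, bits_per_param: int = 16) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    bytes_per_param = bits_per_param / 8
    # Billions of parameters times bytes per parameter gives GB directly.
    return params_billion * bytes_per_param


for size in (3, 7, 13, 20, 70):
    print(f"{size}B parameters ~ {weights_gb(size):g} GB of (V)RAM at 16 bit")
```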

… and quantisation makes them more usable

See what is trending on huggingface.co/

The same models at 6-bit quantisation are:

- 3B parameters ~ 2.25 GB of (V)RAM

- 7B parameters ~ 5.25 GB of (V)RAM

- 13B parameters ~ 9.75 GB of (V)RAM

- 20B parameters ~ 15 GB of (V)RAM

- 70B parameters ~ 52.5 GB of (V)RAM

This makes all models except the 70B model usable on high-end consumer hardware or low to mid-range professional hardware.
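A quick way to check which models fit on a given card is to compare the quantised weight size against the available memory. The sketch below assumes a 24 GB budget as a stand-in for a high-end consumer GPU; that number is an assumption for illustration, and the estimate again covers weights only.

```python
MODEL_SIZES_B = (3, 7, 13, 20, 70)  # model sizes in billions of parameters
VRAM_BUDGET_GB = 24                 # assumed high-end consumer GPU (hypothetical budget)


def quantised_gb(params_billion: float, bits: int) -> float:
    """Weight memory in GB at the given number of bits per parameter."""
    return params_billion * bits / 8


def models_that_fit(budget_gb: float, bits: int) -> list[int]:
    """Model sizes whose weights alone fit within the memory budget."""
    return [s for s in MODEL_SIZES_B if quantised_gb(s, bits) <= budget_gb]


print("fits at 16 bit:", models_that_fit(VRAM_BUDGET_GB, 16))
print("fits at 6 bit: ", models_that_fit(VRAM_BUDGET_GB, 6))
```

Changing `VRAM_BUDGET_GB` or the bit width shows how quickly the set of usable models grows as quantisation gets more aggressive.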

Try out huggingchat

How to find the best model?

Next Steps

Check out the amazing content made by Andrej Karpathy

Summary

- System 2 thinking

- Self supervising

- LLM OS

LLM OS - Multimodal models

Multimodal models - Vision

LLM OS - Live demo

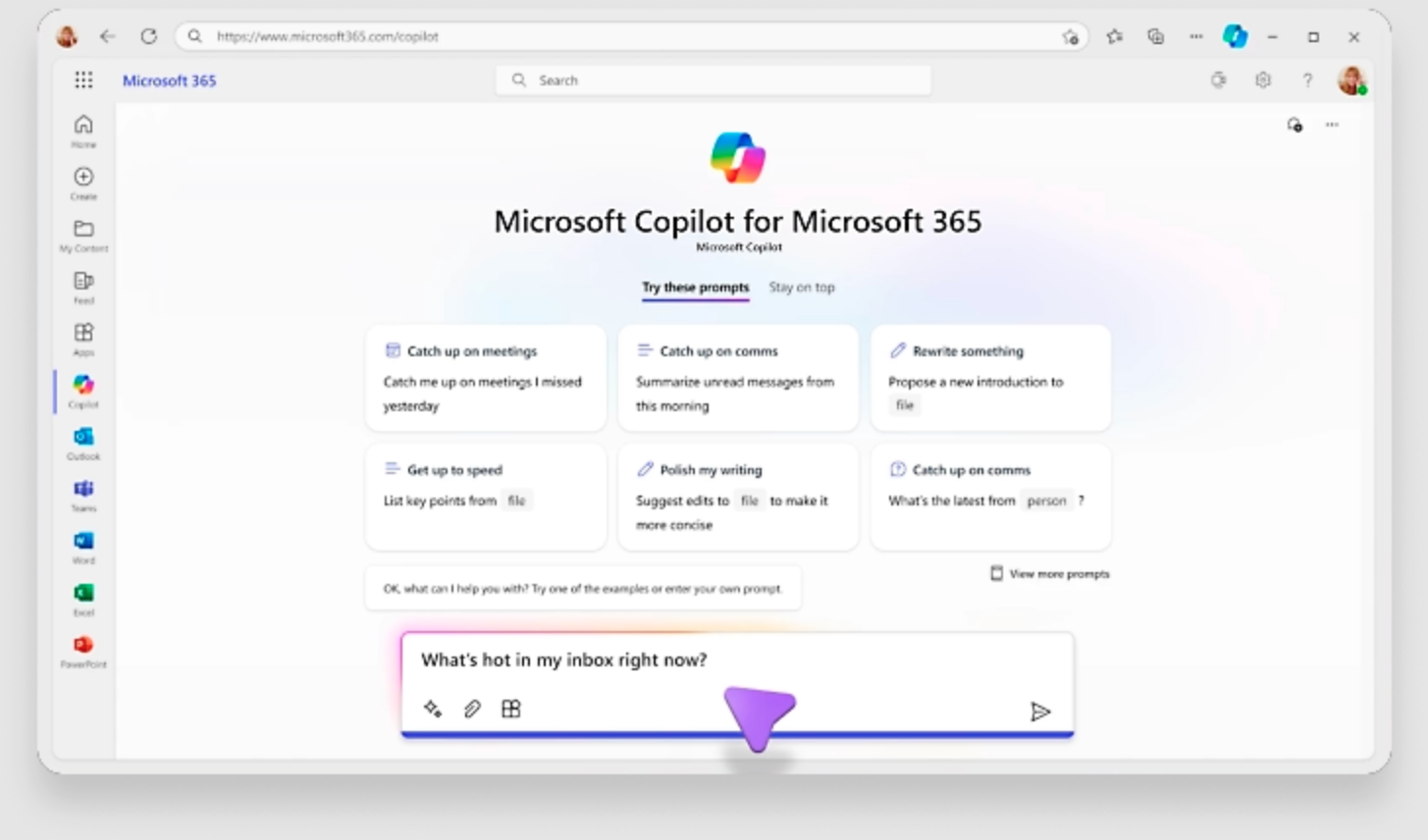

LLM OS - Microsoft Copilot

Reinforcement Learning - Self-Improvement

- Currently, LLMs only learn language through human feedback (imitation)

- One of the big innovations in Reinforcement Learning is Self-Improvement:

- For example playing games against yourself to keep self improving

- Unclear how to achieve this for Language

- If possible for domain-specific tasks, this could propel LLMs to super-human capabilities.

Reinforcement Learning for reasoning

Two big models in recent days:

Allow for allocating extra test-time compute:

- More number crunching before answering a question

- Works a lot better for hard problems

- The models themselves allocate the compute, and learn to do this via reinforcement learning

Math mode ‘on’

- DeepMind: an LLM-based model is now better at geometry problems than the average gold medalist in the International Mathematical Olympiad (IMO)

AutoGPT - An Autonomous GPT-4 Experiment

- 🌐 Internet access for searches and information gathering

- 💾 Long-term and short-term memory management

- 🧠 GPT-4 instances for text generation

- 🔗 Access to popular websites and platforms

- 🗃️ File storage and summarization with GPT-3.5

- 🔌 Extensibility with Plugins

LLM Agents are becoming more mainstream

Mainstream AI agents are expected to be one of the next big steps:

Sparks of AGI?

Sparks of Artificial General Intelligence: Early experiments with GPT-4

“The central claim of our work is that GPT-4 attains a form of general intelligence, indeed showing sparks of artificial general intelligence. This is demonstrated by its core mental capabilities (such as reasoning, creativity, and deduction), its range of topics on which it has gained expertise (such as literature, medicine, and coding), and the variety of tasks it is able to perform (e.g., playing games, using tools, explaining itself, …). A lot remains to be done to create a system that could qualify as a complete AGI. We conclude this paper by discussing several immediate next steps, regarding defining AGI itself, building some of missing components in LLMs for AGI, as well as gaining better understanding into the origin of the intelligence displayed by the recent LLMs.”

- Overblown claim or a vision for the future to come?

- Let’s first find a definition of AGI…
